Why perfect detection is impossible—and why claiming it miseducates the market

100% detection

100% protection

It sounds definitive. Reassuring.

It’s also fundamentally false—not just in practice, but in theory.

When a cybersecurity vendor claims 100% detection, they aren’t merely exaggerating. They are misinforming buyers, miseducating decision-makers, and setting expectations no security system—now or ever—can meet.

That’s not opinion.

That’s mathematics.

Detection Cannot Be Perfect—By Definition

100% Detection Is Not Security—It’s Storytelling

At the core of computing lies a proven limit: the Halting Problem, which Alan Turing proved undecidable in 1936.

In plain terms:

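There is no algorithm that can take any program and always correctly decide whether it will ever stop running. By extension (Rice's theorem), no algorithm can always decide any nontrivial question about what a program will do.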

Translated to cybersecurity:

- You cannot fully predict runtime behavior

- You cannot pre-classify every future binary

- You cannot distinguish “good” from “bad” code with certainty

- You cannot achieve 100% detection without either blocking legitimate software or missing novel attacks
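The diagonal argument behind Turing's proof can be sketched in a few lines. This is a toy model, not a real detection system. It assumes that a "program" is a zero-argument Python callable, that a program counts as malicious exactly when running it returns the string "payload", and that a detector is any deterministic function that always returns a verdict. All names here (`build_adversary`, `detector_is_wrong`, and so on) are hypothetical. Whatever detector you plug in, the program built against it asks the detector about itself and then does the opposite. The verdict is therefore always either a false positive or a missed attack.

```python
from typing import Callable

# Toy model (assumption): a "program" is a zero-argument callable returning a
# string, and it is malicious exactly when it returns "payload".
Program = Callable[[], str]
# A detector inspects a program and returns True to flag it as malicious.
# Assumption: it is deterministic (same program, same verdict) and always returns.
Detector = Callable[[Program], bool]


def is_malicious(program: Program) -> bool:
    """Ground truth in the toy model: run the program and check what it did."""
    return program() == "payload"


def build_adversary(detector: Detector) -> Program:
    """Hypothetical helper: build a program that asks `detector` about itself
    and then does the opposite of whatever the verdict implies."""

    def adversary() -> str:
        if detector(adversary):
            return "benign"   # flagged, so behave harmlessly: a false positive
        return "payload"      # cleared, so act maliciously: a missed attack

    return adversary


def detector_is_wrong(detector: Detector) -> bool:
    """True if `detector` misclassifies the adversary built against it."""
    adversary = build_adversary(detector)
    return detector(adversary) != is_malicious(adversary)


if __name__ == "__main__":
    candidates = {
        "flag everything": lambda p: True,
        "flag nothing": lambda p: False,
        "name heuristic": lambda p: "adversary" in p.__name__,
    }
    for name, detector in candidates.items():
        # Every candidate is wrong on the adversary built against it.
        print(name, detector_is_wrong(detector))
```

A detector that tries to sidestep this by simply running the program to see what it does never reaches a verdict on the adversary, because the adversary in turn consults the detector. In Python, the mutual recursion eventually raises `RecursionError`. That non-termination is the Halting Problem showing up directly.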

This isn’t a tooling gap.

It’s a law of computation.

Claiming otherwise means redefining the problem—or hoping the audience won’t notice.

“But They Mean MITRE…”

Yes, vendors will clarify that 100% detection refers to MITRE ATT&CK evaluations.

That distinction matters—but only if the buyer understands it.

Most don’t.

A MITRE ATT&CK evaluation is:

- controlled

- retrospective

- scripted

- limited to a subset of known techniques

It does not:

- predict future attacks

- measure unknown tradecraft

- represent live adversarial adaptation

MITRE's evaluation is valuable, but it is not reality.

Leading with “100% detection” in a hero banner anchors perception long before nuance appears. That’s not education. It’s marketing sleight of hand.

If 100% Detection Were Real, Breaches Wouldn’t Exist.

100% Detection Is Just Predicting the Future

Detection is inherently backward-looking.

Attackers are not.
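Here is a minimal sketch of why detection looks backward: a signature detector can only recognize what it has already seen. The sample bytes, signature set, and function names below are hypothetical, and exact-hash matching is a deliberately simple stand-in for real signature engines. Scored only against the samples it was built from, the detector reports a perfect rate. A variant that differs by a single appended byte is invisible to it.

```python
import hashlib


def sha256(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-ins for past, already-analyzed attacks.
KNOWN_SAMPLES = [b"dropper-v1 payload", b"loader-v2 payload", b"stealer-v3 payload"]

# The detector's knowledge is frozen at build time: hashes of past samples.
SIGNATURES = {sha256(s) for s in KNOWN_SAMPLES}


def detect(sample: bytes) -> bool:
    """Flag a sample only if it exactly matches a known signature."""
    return sha256(sample) in SIGNATURES


def detection_rate(samples: list[bytes]) -> float:
    """Fraction of the given samples that the detector flags."""
    return sum(detect(s) for s in samples) / len(samples)


if __name__ == "__main__":
    # Evaluated on the attacks it was built from, the score is perfect.
    print(detection_rate(KNOWN_SAMPLES))  # 1.0
    # A variant with one appended byte was never seen, so it is missed.
    print(detection_rate([b"stealer-v3 payload!"]))  # 0.0
```

Real engines go beyond exact hashes with fuzzy signatures, heuristics, and behavioral rules. That narrows the gap but does not close it, because every rule is still derived from what attackers have already done.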

Claiming perfect detection is equivalent to saying:

“We can predict every future attack technique before it exists.”

No serious discipline claims this.

- Finance doesn’t promise 100% fraud prevention

- Aviation doesn’t promise 100% failure prediction

- Medicine doesn’t promise 100% diagnostic accuracy

Cybersecurity should not pretend it’s exempt from uncertainty.

The Accountability Gap No One Talks About

Here’s the uncomfortable question:

If vendors are so confident in 100% detection, why don’t they publish real-world historical detection statistics?

Other industries do:

- insurance publishes loss ratios

- cloud providers publish uptime SLAs and incident histories

- aviation publishes incident rates

Cybersecurity vendors publish… marketing.

Meanwhile, when breaches happen:

- victims are forced to disclose

- customers are notified

- reputations are damaged

But when a vendor’s “100% detection” fails?

Nothing.

No public miss rates.

No failure disclosures.

No accountability.

The buyer absorbs the consequences.

The seller keeps the slogan.

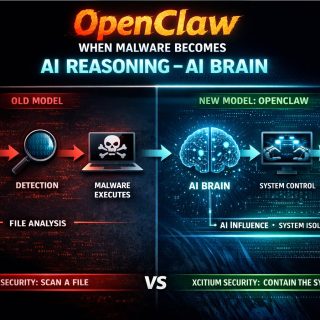

Detection Is Not Protection

Detection asks: “Did I recognize this?”

Protection asks: “Did damage occur?”

They are not the same.

A system can detect nothing and still prevent harm.

A system can detect everything it knows and still fail catastrophically on what it doesn’t.

Claiming 100% detection avoids the harder, more honest question:

What happens when detection inevitably fails?
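One way to answer that question is to make damage depend on permissions rather than on recognition. The sketch below is a hypothetical default-deny gate, not any product's mechanism. The `ALLOWED` policy, paths, and process names are invented for illustration. The gate never consults the detector's verdict, so an attack the detector misses is still confined to what the policy permits. In practice, detection and containment would be layered. The sketch isolates the containment layer to show that its guarantee does not depend on the detector being right.

```python
import posixpath
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    process: str
    operation: str              # e.g. "read", "write"
    target: str                 # absolute path the process wants to touch
    flagged_by_detector: bool   # recorded, but deliberately not used below


# Hypothetical policy: the only (operation, path prefix) pairs allowed.
# Everything else is denied by default.
ALLOWED = {
    ("read", "/srv/app/"),
    ("write", "/srv/app/tmp/"),
}


def permitted(action: Action) -> bool:
    """Allow an action only if the policy explicitly lists it.

    The detector's verdict plays no part: an unrecognized attack gets the
    same limited blast radius as everything else. Paths are normalized so
    "../" segments cannot escape an allowed prefix; a real enforcement point
    would also have to handle symlinks, which this sketch ignores.
    """
    target = posixpath.normpath(action.target)
    return any(
        action.operation == op and target.startswith(prefix)
        for op, prefix in ALLOWED
    )


if __name__ == "__main__":
    # A never-before-seen process that the detector did not flag.
    novel = Action("unknown.bin", "write", "/etc/passwd", flagged_by_detector=False)
    sneaky = Action("unknown.bin", "write", "/srv/app/tmp/../../../etc/passwd", False)
    routine = Action("app-worker", "write", "/srv/app/tmp/cache.dat", False)
    print(permitted(novel), permitted(sneaky), permitted(routine))  # False False True
```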

The Honest Position the Industry Avoids

Real cybersecurity begins with one admission:

We cannot predict the future.

Once you accept that, better architectures emerge:

- assume novelty

- assume detection failure

- reduce blast radius

- prevent damage without certainty

That’s how mature engineering disciplines evolve.

Until the industry makes that admission, “100% detection” remains what it truly is:

- a mathematical impossibility

- a miseducation of buyers

- and a disservice to cybersecurity as a profession

If someone promises you 100% detection, ask one question:

“Of what—past attacks, or the future?”

Because only one of those is knowable.